Table of Contents

- 0.1.What is Linescale?

- 0.2.What's so special about the Linescale consumer interface?

- 0.3.What's so special about the Linescale analytics?

- 0.4.How does Linescale compare to Conjoint Analysis or Max-Diff? How does Linescale diagnose which elements are contributing and which are hurting?

- 0.5.When should I use Linescale?

- 0.6.A word from Larry on SUCCESS in Product Development

- 0.7.Linescale Vocabulary and Glossary of Terms

- 0.7.1.The Linescale

- 0.8.Linescale Scoring and the Linescale algorithms

- 0.9.Use of the Acceptor Segments

- 0.9.2.Linescale Rating Sets

- 0.9.3.Paired Comparisons

- 0.9.4.Verbatims

- 0.9.5.Driver Analysis

- 0.10.Norms

- 0.11.Why follow Linescale's interview protocol?

- 0.12.On Reading Linescale Reports - (Go To the Report - Analysis Wizard for detailed explanation)

- 0.13.Setting up the reports - Selecting the default sample base

- 0.14.On Coding Open End Questions with Filters

- 0.15.On Browsers to use when Linescaling

- 0.16.On whether a test item is a substitute for or a complement to the "Most Frequent" product in the space

- 0.17.On what adjective or adverb to use for selection of controls:

- 0.18.On gender quotas, particularly for client customer lists

- 0.19.On Cascading Quotas – Do not do it

- 0.20.On asking whether their personal score is About Right, or if they would Raise it or Lower it

- 0.21.On Using Pictures with Controls

- 0.22.On why we include "No other favorite" as a choice for the second competitive favorite?

- 0.23.On Research Types and Iteration for Development - (Go To the Setup Wizard to see all the test types)

- 0.24.On Customer Satisfaction/Dissatisfaction Tests for a brand, product or enterprise.

- 0.25.On The Importance of Precisely Understanding Dissatisfaction

- 0.26.On Deep Dive Customer Satisfaction/Dissatisfaction Tests

- 0.27.On Linescale Concept Tests

- 0.28.On Linescale Advertising and Communication Testing

- 0.28.1.Ad Test

- 0.28.2.Proposition testing

- 0.29.On Customer Satisfaction/Dissatisfaction - or, Listening to Your Customer

- 0.30.On Market Assessment

- 0.31.On Linescale Product Tests

- 0.32.On the four different measurements generated and reported using a Linescale - and how to use them

- 0.33.On the three different Linescale Scoring Types and generating Preference Segments

- 0.34.A Word on Segmentation by Preference Segmentation

Linescale started thanks to the genius of a Harvard Junior Fellow named Volney Stefflre, who back in 1965 was referred to General Foods by the Harvard Business School. I was then manager of the Maxwell House Division research department. This unique approach to measurement works so well for marketing research and product development that we've stayed with it and enhanced it over the years as I practiced my own product development work. In the early 2000s, as Web use matured, we translated Linescale to the Internet.

The goal was to create a Full Service research system as easy to use as an ATM. The dynamics of Linescale, the Internet and many years of practical experience let us create an easy to use, powerful, fast and insightful set of tools.

Enjoy Linescaling!

______________________________

What is Linescale?

Here's an overview: Linescale is an Automated Marketing Research System for Product and Business Development. Linescale delivers quantitative evaluation of consumer satisfaction for any business or brand element, and both quantitative and qualitative identification of the reasons for consumer satisfaction levels (good and bad). This enables decision makers to (1) assess the market and the position of their business, brand and website within it, including strengths, weaknesses and opportunities, and (2) in developing improvements, new ideas and fixes, sort stronger ideas from weaker ones while getting direction for improving consumer acceptance of ideas, ads, products and service.

And, Linescale is as easy to use as an ATM.

Linescale automatically creates the interview and the report. All you have to do is tell the Linescale Wizard what the category is, who the competitors are, your bets about what people might like or not like. Linescale does the rest - and presents the interview to you for editing. You can add whatever custom questions you want to the interview, all of which will be analyzed by preference segment.

Linescale is inexpensive to use. If you wish, we will do it for you for modest additional cost. But Linescale is so easy and intuitive to set up, run and read, it needs no technical knowledge and almost no practice. Linescale's self-training demos let you try it out - or run tests immediately. And our help desk always stands by to guide if needed.

What's so special about the Linescale consumer interface?

The Linescale consumer interface is superior because it creates a patented, enjoyable, interactive interview that engages the customer, rather than a "static" survey to be filled out. It is intuitive. Linescale asks consumers to make decisions the way they do every day - in comparison with their personal alternatives. It measures decisions rather than answers to unfamiliar questions. And it gives people continuous feedback through the interview. Linescale is visual and enjoyable - we measure how enjoyable and accurate the conversational interview was for the respondent in every test. And Linescale is so efficient, we can keep interviews short (four to ten minutes) and respondents engaged.

What's so special about the Linescale analytics?

Linescale is a superior analytic system because it identifies the individuals who like or “accept” a tested thing versus those who do not. Linescale then provides a quantitative and a qualitative view of the drivers of acceptance/rejection, so a tested variable’s consumer acceptance can be improved by the Client or the variable can be dropped from further development.

Linescale collects ordinal (win-loss decision) data. It is not subject to noise created by differing consumer response to survey questions. Therefore, smaller sample sizes generate better statistical stability. Anecdotally, you can see for yourself as you watch completed interviews stream into the reports.

The key Linescale measure is Acceptor Group size, or the number of consumers who have a Linescore above 600 for the tested variable. A person’s Linescore is calculated depending upon the study type and is covered here separately. Clients who use Linescale effectively use it iteratively and develop their own internal “norms,” which generally do not materially differ from the Linescale norms and action standards.

How does Linescale compare to Conjoint Analysis or Max-Diff? How does Linescale diagnose which elements are contributing and which are hurting?

Linescale differentiates how well specific elements of the idea, concept, enterprise or ad perform by contrasting Acceptors with Rejectors. It also identifies which test components are relatively strong versus weak by direct paired ratings on a single Linescale. Linescale shows you clearly what is working and what is not. Linescale does not try to tell you the percent impact of each variable. That is empirically determined in our system by making the indicated changes and running another test. We suggest this since Linescale typically is much faster and much less costly than traditional methods, including conjoint analysis. Each Linescale iteration provides a better understanding of underlying strengths, key communication elements and results.

When should I use Linescale?

Linescale has many potential uses. It not only screens and sorts out ideas early in the development process, it is also an excellent tool to define unmet needs and opportunities in a market space. Among other features, Linescale can define the strengths and weaknesses, the "white space" of the existing market space and unmet needs by probing consumer “points of pain.” It is an excellent development tool that can substitute for some or all consumer qualitative research. And Linescale even offers superior Dynamic Moderator Guides to aid in the focus group process. A Linescale project can provide quantitative direction along with the consumer vocabulary explaining the “why’s” of the situation. Linescale can be used to develop all Brand elements – position, names, ads, varieties, pricing, packages, promotions, web sites, etc.

A word from Larry on SUCCESS in Product Development

The key to development success is ITERATE, ITERATE, ITERATE. Even Shakespeare wrote multiple drafts. In my experience, even the best ideas do not spring full-blown like Athena from the head of Zeus. Test, learn, fix, re-test, learn, tweak, re-test, learn and do not hesitate to keep trying to drive up your Acceptor Score. When do you stop? At Asymptote. Stop when you can no longer profitably improve. Not before, or you are cheating your customers, employees and stockholders - as well as yourself.

Linescale Vocabulary and Glossary of Terms

The Linescale

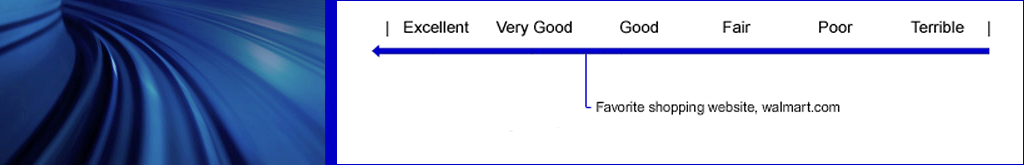

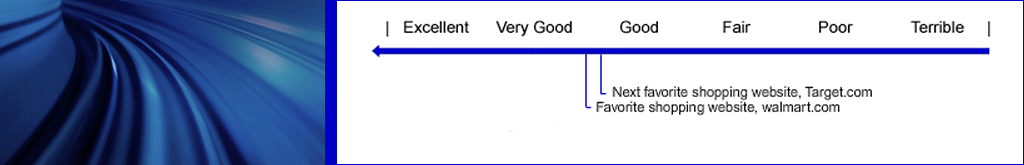

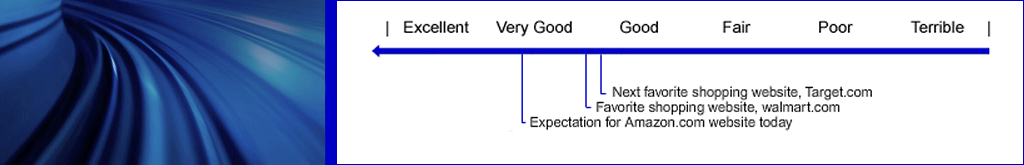

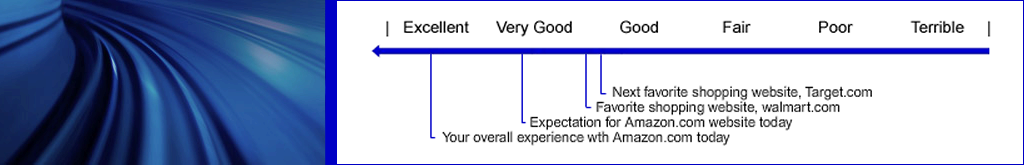

The Linescale provides a vehicle for each individual to make their own decisions about which business or brand element they prefer to their other possible choices. The Linescale generates two types of data – a mean score and a win/loss matrix (also called paired comparison data) that tells you how many times one item was picked over another.

Linescale quantitative evaluation of consumer satisfaction is based upon aggregation of individual choices. There is no “noise” deriving from different people using rating scales differently. The combination of ordinal data, self-normed scales, familiar anchors and multiple measures produces highly stable data. For large samples, you can be confident that smaller filtered sub-samples are stable. And for iterations, smaller total samples can be safely used.

Linescale Scoring and the Linescale algorithms

A Linescore is calculated for each respondent in a Linescale study. It is the measure that defines a respondent's Acceptance level for the tested variable: the higher the Linescore, the higher the respondent's Acceptance level.

Based on their personal Linescore, respondents are assigned to one of four Acceptance Segments: Acceptor, Borderline, Indifferent and Rejector.

The Linescore is calculated by combining seven measures, as follows. Two measures are paired comparison preferences versus the first and second “favorite” brands or practices in the market space. A third measure is a paired comparison of the actual experience of the product, website or ad against the expectation for the tested item; in a concept test, the second, more detailed exposure of the concept is instead scored on its preference relative to the initial impression of the concept. The fourth measure is a metric score on likelihood of recommendation. The fifth, sixth and seventh measures are metric scores based on ratings on three important variables. The paired comparisons enter very heavily into the scoring algorithm, as does the Recommendation metric score. The ratings on the three (and sometimes two) "important variables" enter into the scoring, but less so than the paired comparisons and recommendation likelihood. These seven measures are combined into a single score in real time via an algorithm. This score is called the Linescore and determines whether each individual is an Acceptor, Borderline (Interested, but not an Acceptor), Indifferent or Rejector. The range of possible scores generated by the algorithm runs from 200 to 800, although in extreme cases a score lower than 200 is possible.

Linescale organizes all data by Four Acceptor Group Classifications:

Acceptors are those respondents who like the tested variable and are completely satisfied.

Borderline are either missing an important benefit or have one or two negatives.

Indifferent are generally unsatisfied by the tested item.

Rejectors are extremely dissatisfied and have serious issues with the tested variable.

Use of the Acceptor Segments

The key Linescale measure is Acceptor Group size, or the number of consumers who have a Linescore above 600 for the tested variable. The “norms” we present are really “guidelines” arrived at through inspection of many studies.

However, for business understanding, the size, dynamic and trend of customers who are Dissatisfied (Indifferent and Rejectors) is an important predictor of business performance. Analysis of the reasons why customers are Dissatisfied provides clear direction for business improvement. This is true whether the business is in growth or "challenge" situations.
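As a rough illustration of the two headline counts described above, here is a minimal Python sketch. The only number taken from the text is the Acceptor cutoff (a Linescore above 600). The function names, the sample scores and the idea of receiving segment labels as ready-made strings are assumptions made for the example; the cutoffs for the other three segments are not stated here, so the sketch does not try to derive them.

```python
from collections import Counter

ACCEPTOR_CUTOFF = 600  # from the text: Acceptors have a Linescore above 600


def acceptor_group_size(linescores):
    """Return (count, share) of respondents whose Linescore is above the cutoff."""
    acceptors = sum(1 for score in linescores if score > ACCEPTOR_CUTOFF)
    share = acceptors / len(linescores) if linescores else 0.0
    return acceptors, share


def dissatisfied_share(segments):
    """Share of respondents labeled Indifferent or Rejector.

    `segments` is a list of already-assigned segment labels (hypothetical input).
    """
    counts = Counter(segments)
    dissatisfied = counts["Indifferent"] + counts["Rejector"]
    return dissatisfied / len(segments) if segments else 0.0


# Hypothetical Linescores for eight respondents.
scores = [720, 610, 600, 455, 380, 655, 590, 240]
count, share = acceptor_group_size(scores)
print(count, share)
```

Note that a score of exactly 600 is not counted, since the text says "above 600". Tracking both numbers across iterations gives the size and the trend of each group.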

Scoring Types

There are three types of Linescore algorithms:

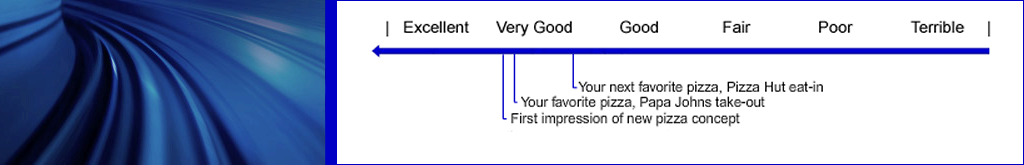

1. Type One Scoring is used when there is only a single presentation of a test variable, such as viewing an ad or measuring satisfaction with a recent purchase.

2. Type Two Scoring is used when the test variable consists of two parts: a brief initial exposure to a concept, for which a first impression is rated, followed by a more detailed presentation of the idea. It is also used in product testing when the product’s concept is first presented and rated, followed by an evaluation of the product. Type Two is insightful for concept and product testing because it considers the respondent's difference between the Impression (Core Idea) and the Experience (Execution of the Core Idea).

3. Type Three Scoring is used for multiple alternative screening or exploring, in which case an abbreviated, slightly less refined scoring method is used. This is an efficient way to test multiple items with excellent relative results and reasonable accuracy of scoring. In Explore tests, Driver Analysis is also produced for each alternative tested. In Type One and Type Two testing (Monadic Tests), seven scores comprise each rating. In a Type Three test (Proto-Monadic, or Sequential Monadic), five scores comprise each rating.

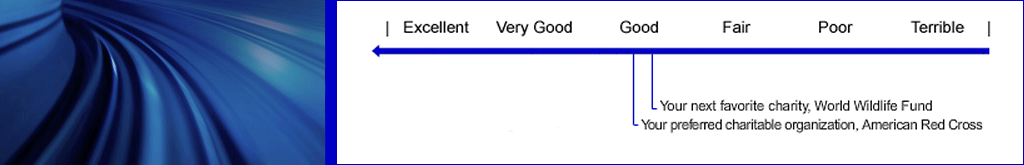

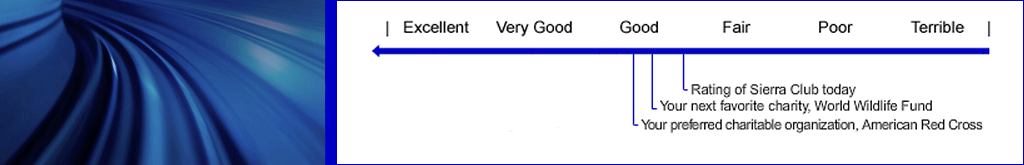

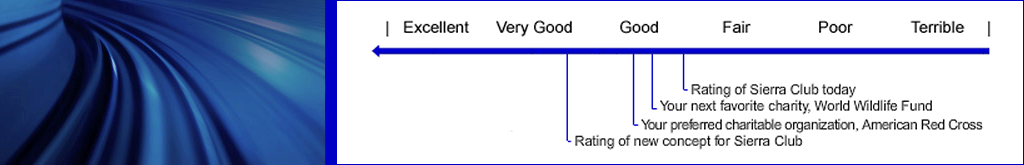

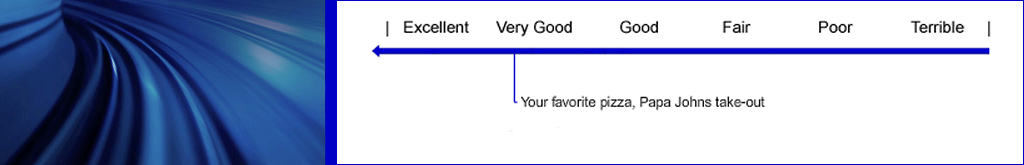

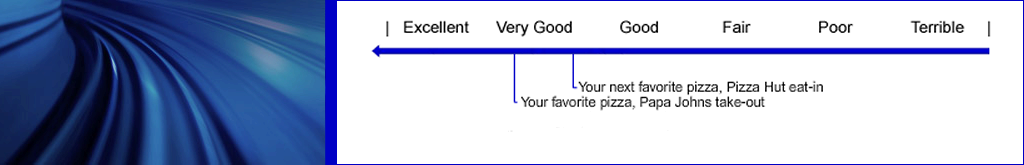

Linescale Rating Sets

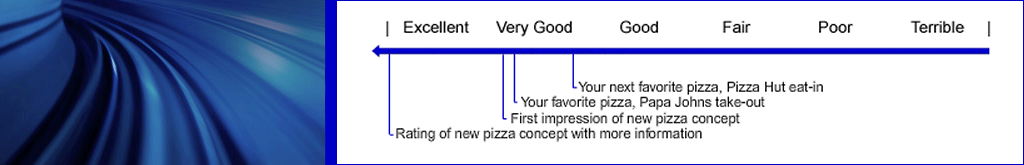

A Linescale Rating Set is defined as the rating of two or more items on the same Linescale. The first item is rated by itself on the scale; the second is rated on the same scale compared to the first item's rating, which remains visible, and so on. A Linescale Rating Set generates three types of information from the same data point: (1) paired comparison data that defines how many times each item in the set is preferred to each other item, (2) a histogram, or frequency count, of what percentage of respondents marked each item under each of the descriptive labels or “boxes” used as Linescale guides, and (3) a mean rating.
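To make the "three outputs from one data point" idea concrete, here is a small Python sketch. It is only an illustration: the 0-100 scale, the three box labels and their boundaries, the item names and the ratings are all hypothetical, and ties are simply counted as a win for neither item.

```python
from itertools import permutations
from statistics import mean

# Hypothetical data: each dict holds one respondent's marks for three items
# placed on the same 0-100 line.
ratings = [
    {"A": 80, "B": 60, "C": 30},
    {"A": 55, "B": 70, "C": 40},
    {"A": 90, "B": 50, "C": 65},
    {"A": 45, "B": 45, "C": 20},
]

# Hypothetical box labels, each with the lowest score that falls in the box.
BOXES = [(0, "Low"), (34, "Middle"), (67, "High")]


def paired_wins(ratings):
    """(1) Win/loss matrix: wins[(a, b)] = respondents who placed a above b."""
    items = sorted(ratings[0])
    wins = {pair: 0 for pair in permutations(items, 2)}
    for r in ratings:
        for a, b in wins:
            if r[a] > r[b]:
                wins[(a, b)] += 1
    return wins


def box_of(score):
    """Return the label of the highest box whose lower bound the score reaches."""
    label = BOXES[0][1]
    for lower, name in BOXES:
        if score >= lower:
            label = name
    return label


def box_percentages(ratings, item):
    """(2) Histogram: percent of respondents marking the item in each box."""
    counts = {name: 0 for _, name in BOXES}
    for r in ratings:
        counts[box_of(r[item])] += 1
    return {name: 100 * n / len(ratings) for name, n in counts.items()}


def mean_ratings(ratings):
    """(3) Mean position of each item on the line."""
    return {item: mean(r[item] for r in ratings) for item in sorted(ratings[0])}


print(paired_wins(ratings))
print(box_percentages(ratings, "A"))
print(mean_ratings(ratings))
```

All three views are computed from the same list of marks, which is the point: no extra questions are needed to get the paired comparison table.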

Paired Comparisons

The paired comparison data generated by a Linescale Rating Set is a powerful tool to help understand which elements of a tested variable are strong and which are weak. This data also can be used as a development tool to screen and evaluate component building blocks for high scoring concepts, ads, product lines, etc.

Verbatims

A customer's response in their own words describing why they rated and scored a test item as they did is called a Verbatim. Verbatims are organized by Acceptor Group, from highest scoring respondent to lowest scoring respondent. Respondents essentially explain the decisions they have just made. This provides both the salience of the possible reasons for rating (what is important to the individual) and the granularity or "character" of the reasons for rating.

Respondents generally have a difficult time telling you why they like or dislike something, but after they have made their ratings, received their personal score, and told us whether they agree, would raise or would lower their score, the task is easier because the context is personal and specific.

Verbatims are extremely valuable in understanding why Acceptors prefer you to competition; why Rejectors hate you; and what issues exist with the Borderline and Indifferents. Verbatims are not edited. They provide direct customer language. Verbatims provide an excellent source of qualitative insight throughout a development process or a market exploration to help give the Linescale numbers additional depth of meaning.

Self-Coding of Verbatims

Immediately after giving their verbatim reasons for rating, respondents are asked to select up to five main reasons for their rating from a list of positive and negative possible reasons. These lists are constructed from the client's experience and judgment, supplemented by analyzing the verbatims from prior tests. These self-selected positive and negative reasons for rating are the basis for construction of the Driver Analysis.

Driver Analysis

A Linescale study that uses Type One, Type Two or Type Three scoring uses a “positives and negatives” list. It is critical that a Linescale positives and negatives list capture all major potential reasons for a respondent liking or not liking a tested variable. Respondents select up to five positives or negatives from this larger list.

Linescale shows the frequency of respondent selection of positives and negatives by total sample and by each Acceptance Segment.

The frequency defines overall importance to that segment. A unique analysis called a Driver Analysis calculates and summarizes the positives and negatives that differentiate each Acceptance Segment from every other Acceptance Segment.

For each pair of Acceptance Segments, the driver analysis calculates the difference between the two segments in frequency of selection of each item and multiplies this difference by the larger of the two segments' selection percentages for that item.

This shows the importance of the item in differentiating Acceptors from Rejectors, etc., and is a guide to improving the concept, product or enterprise, as well as a guide to development of marketing communications supporting the element.
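The calculation described above can be sketched in a few lines of Python. This is our reading of the description, not the production algorithm: selection frequencies are assumed to be fractions between 0 and 1, the sign of the difference is kept so positive scores favor the first segment, and the item names and percentages are invented for the example.

```python
def driver_score(pct_a, pct_b):
    """Difference in selection frequency times the larger of the two frequencies."""
    return (pct_a - pct_b) * max(pct_a, pct_b)


def driver_analysis(selection_pct, seg_a, seg_b):
    """Score every item for one pair of segments, strongest differentiators first."""
    scores = {
        item: driver_score(pcts[seg_a], pcts[seg_b])
        for item, pcts in selection_pct.items()
    }
    return sorted(scores.items(), key=lambda kv: abs(kv[1]), reverse=True)


# Hypothetical share of each segment selecting each reason for rating.
selection_pct = {
    "Great taste": {"Acceptor": 0.60, "Rejector": 0.10},
    "Too expensive": {"Acceptor": 0.05, "Rejector": 0.45},
    "Convenient": {"Acceptor": 0.30, "Rejector": 0.25},
}

for item, score in driver_analysis(selection_pct, "Acceptor", "Rejector"):
    print(f"{item}: {score:+.3f}")
```

Multiplying by the larger frequency keeps rarely selected items from ranking high just because the gap between segments is large in relative terms. In this made-up data, "Convenient" is selected fairly often by both groups but barely differentiates them, so it ranks last.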

Norms

On Linescale Norms and Action Recommendations

I developed these norms using two different empirical sources. The first is forty years of personally creating and developing three or four dozen products in varied mass markets for my clients’ accounts and for my own account. I used Linescale consistently in this process. The algorithm and scales were created, tweaked and refined over this period. Here's a quick list of the products I've developed or created over the years:

http://gort.net/ProductIndex.htm

The second empirical source is doing thousands of Linescale tests over that same time period for others in pursuit of their business goals. Since I was in a consultative role rather than a vendor role, I was able to observe both successes and failures associated with products, ads, concepts and marketing components with different scoring levels.

Linescale does not claim to be a test market predictor, but is in fact a superb development and assessment tool. Our norms and action standards are general guidelines that have proved very useful over time for a wide variety of companies. These norms and action standards also pass the test of "sensibility" when you examine how they are constructed.

There is solid rationale based on two criteria. One is the "Reasonable Person" rule. All scales that contribute to the scoring are quantitative and visible. Performance versus each consumer's favorite competitive alternatives is an important part of the scoring, and everything is done in plain English without "black boxes." Therefore, a reasonable person could understand the conclusions and recommendations based on looking at the individual measures that make up the Linescores used in Linescale.

The second criterion is statements and ratings by the respondents themselves. Not only do we ask people whether they agree with their scores, but we ask them if the test as a whole captured their opinion well, whether it was mixed, or if it was inaccurate. Most agree with their scores, and the overwhelming majority agrees the test as a whole captured their opinion well.

As an aside, there are not many tests in academia or the business world that do either, let alone both of these respondent validations.

Why follow Linescale's interview protocol?

On following the precise Linescale test sequence rather than free-lancing questions.

Linescale scoring has a precise programmatic sequence we need to follow. From the moment we begin to get controls until the end of the scoring screen, this cannot be interrupted. You are free to ask anything you want after the scoring sequence.

While you can ask and get answers to any individual question you choose, if changes are made to the programmed sequence, this will not be a Linescale test, it will be what we call an "ad hoc general survey." You can use Linescale to create and report ad hoc questions and answers, but there will be no Acceptor Score with Acceptors, Borderline, Indifferent and Rejectors and consequently, no Linescore comparison between tested items, and there will be no Driver Analysis. The verbatims simply will be responses to scale ratings rather than an engaged response to why they rated it as indicated by their personal Linescore - with the clear meaning of the Linescore, and thus the verbatims will be of lower quality.

Moreover, if you use Linescale simply as a general survey you will only see answers to questions by total and whatever cross-tab question or filter you use. You will see Linescale graphics, paired comparisons and mean scores on all tested Linescales for whatever filters you select in total. But no preference segments. I think you will be disappointed, but you are the client.

On Reading Linescale Reports - (Go To the Report - Analysis Wizard for detailed explanation)

There are a couple of unique features of the Linescale system I can point out to you. In a nutshell...

- Acceptors buy in to the concept, and their ratings of possible communication elements, such as appropriate names or logos for the concept, are usually more important than the element ratings of the total sample. This is also true for Van Westendorp pricing results.

- A single Linescale generates multiple measurements. The Linescale generates mean scores for each item, "box percentages" for each item, and more important, because each respondent places each item on the Linescale in context of all other items, Linescale provides a head-to-head paired relationship of each item versus each other item rated on the scale. This direct-paired measure is very reliable as well as generally more discriminant than metric average ratings.

- The Linescale paired measures "correct" for the normal vote splitting you get when you ask for first choice among multiple items. As you know, similar items split first choice votes and the two true top items can diminish each other. We give preference to the paired comparison win/loss tables over other measures. The Linescale graphics and means just show you where the mean locates on the Linescale. The pairs are true "people preference counts."

- There are very useful verbatim comments that can only be viewed on the individual test reports, not on the combined reports. Click on the Verbatims tab, then click on "open all to see details".
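The vote-splitting point above is easiest to see with numbers. The following Python sketch uses invented data: two similar items (X1 and X2) and one different item (Y), rated by ten hypothetical respondents on the same 0-100 line. The names and scores are assumptions made purely for illustration.

```python
from collections import Counter

# Hypothetical ratings: six respondents like both similar items X1 and X2,
# four respondents prefer the different item Y.
respondents = (
    [{"X1": 90, "X2": 85, "Y": 40}] * 3
    + [{"X1": 85, "X2": 90, "Y": 40}] * 3
    + [{"X1": 50, "X2": 45, "Y": 90}] * 4
)


def first_choice_counts(respondents):
    """Count how often each item is a respondent's single top-rated item."""
    return Counter(max(r, key=r.get) for r in respondents)


def head_to_head(respondents, a, b):
    """Return (wins for a, wins for b) from each respondent's own placements."""
    a_wins = sum(1 for r in respondents if r[a] > r[b])
    b_wins = sum(1 for r in respondents if r[b] > r[a])
    return a_wins, b_wins


print(first_choice_counts(respondents))
print(head_to_head(respondents, "X1", "Y"))
print(head_to_head(respondents, "X2", "Y"))
```

On first choice alone, Y looks like the winner with four votes against three each for X1 and X2. Head to head, both X1 and X2 beat Y six to four, which is the "people preference count" the paired tables report.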

On Opening VERBATIMS details:

The comment in the gray bar at the top is by default the comment made to indicate why a person rated overall as they did.

When you open the verbatims you will see visible to the left, the name of the question for which this is the answer. You can map that against the “little question name” always visible in the editing mode of the interview.

Setting up the reports - Selecting the default sample base

Linescale "Set up the reports" features a toggle that lets you change the sample composition from Linescale's traditional "Scored whether or not completed" to showing "Completed only", which includes only those respondents who clicked "Finish" on the final screen.

The "Scored" number is a standard count we show since it is the criterion for many tests, particularly for those with upfront screening. A respondent is counted as "Scored" when they complete the control and test scoring sequence, the algorithm score has been calculated and respondents are presented their personal score. Completed means they reached the end of the interview and clicked "Finish". Completed does not necessarily mean all non-required questions were answered. Each individual table reports the number of respondents who answered that question.

Below are three pictures that illustrate how to use the toggle. They also show some additional features you can use to customize your reports.

Steps to change sample base toggle:

1. On your Client menu, click any test name and the test menu opens. Select "Set up the reports".

2. From the "Set up the reports" menu, select "Default filter and segmentation".

3. On the "Default filter and segmentation" menu select either "Show scored but not complete" (our default setting) or select "Show completed only". Click "Done".

4. Refresh the report and the new settings will take effect.

You can also archive this or any filtered report from the "Change Report" menu located on the top right hand side of the full report itself.

As you can see from these menus, you can change the default filter to any one of your existing filters, and you can change the Acceptance segmentation from four segments (our default segmentation) to three which combines the bottom two "Disappointed" categories.
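For readers who think in data terms, the two settings described in this section behave roughly like the filters in the Python sketch below. The record layout, field names and sample respondents are hypothetical. Only the definitions of "Scored" and "Completed" and the merged "Disappointed" label come from the text.

```python
from collections import Counter

# Hypothetical respondent records.
respondents = [
    {"id": 1, "scored": True, "completed": True, "segment": "Acceptor"},
    {"id": 2, "scored": True, "completed": False, "segment": "Indifferent"},
    {"id": 3, "scored": True, "completed": True, "segment": "Rejector"},
    {"id": 4, "scored": False, "completed": False, "segment": None},
    {"id": 5, "scored": True, "completed": True, "segment": "Borderline"},
]


def sample_base(respondents, completed_only=False):
    """Default base is everyone scored; optionally keep only those who clicked Finish."""
    if completed_only:
        return [r for r in respondents if r["scored"] and r["completed"]]
    return [r for r in respondents if r["scored"]]


def segment_counts(base, three_segments=False):
    """Count segments, optionally merging the bottom two into 'Disappointed'."""
    merge = {"Indifferent": "Disappointed", "Rejector": "Disappointed"}
    labels = [
        merge.get(r["segment"], r["segment"]) if three_segments else r["segment"]
        for r in base
    ]
    return Counter(labels)


print(len(sample_base(respondents)))
print(len(sample_base(respondents, completed_only=True)))
print(segment_counts(sample_base(respondents), three_segments=True))
```

Respondent 4 never reached the score screen, so they are in neither base. Respondent 2 was scored but did not click "Finish", so they drop out only when the toggle is set to completed only.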

Set up the reports > Default filter and segmentation > Show completed only (clicked Finish)

On Coding Open End Questions with Filters

An alternate use of filters is to "code" the presence of a word or name in an open-end answer. A typical use is for unaided awareness questions, such as "What is the first brand of coffee that comes to mind?" If you are interested in tracking Maxwell House, create a filter with all possible spellings of Maxwell House Coffee. Applying this filter will give you an immediate count of the number of respondents who wrote in a variant of Maxwell House in answer to that question.
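The idea behind such a filter can be sketched in Python as follows. This is not how Linescale filters are implemented internally. It is a minimal illustration that assumes a case-insensitive "contains" match against a list of spellings, and the variants and sample answers are made up.

```python
def make_filter(variants):
    """Build a matcher that is true when an answer contains any listed spelling."""
    needles = [v.casefold() for v in variants]

    def matches(answer):
        # Lowercase and collapse repeated spaces before looking for a variant.
        text = " ".join(answer.casefold().split())
        return any(needle in text for needle in needles)

    return matches


# Hypothetical spellings a respondent might type for the tracked brand.
maxwell = make_filter(["maxwell house", "maxwellhouse", "maxwel house", "maxwell hse"])

# Hypothetical open-end answers to the unaided awareness question.
answers = ["Maxwell House", "Folgers", "maxwel  house coffee", "Starbucks", "MAXWELLHOUSE", ""]

count = sum(1 for a in answers if maxwell(a))
print(count)
```

The count is only as good as the list of spellings, so it pays to skim the unmatched answers for variants you did not anticipate and add them to the filter.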

On Browsers to use when Linescaling

Please use Firefox (or Safari on a Mac) when Linescaling. These browsers follow industry standards; AOL and IE use proprietary standards.

On whether a test item is a substitute for or a complement to the "Most Frequent" product in the space

We do not actually ask respondents whether they would choose an item over, or substitute it for, their current most frequent choices in the space. The "favorites" are there as a "reference" to "anchor" and normalize the scale to that individual. We READ the relative ratings of the test items and favorites and make a determination of relative preference. But the important point for your case here is that the respondent is NOT asked to rate the test item VERSUS his favorite.

In some cases it might imply substitution; in others, complementarity. The rating just shows you where it fits in the preference space in comparison with the known quantities. Good judgment will serve to tell you whether the implication is complementarity, as in condiments or cereals, (non-zero sum) etc., or whether it is substitution, as in political candidates or choice of school (zero-sum).

On what adjective or adverb to use for selection of controls:

The Setup Wizard uses "most frequently" in selecting the controls.

I once used "favorite" as a selection adjective, but "favorite" can mean too many things to people, including something so pricey or unavailable they never use it. So I use "most frequently" for all categories, and give you the choice of the appropriate verb. The verb might be buy, use, go to, purchase, indulge in or whatever makes sense.

Once you have the verb, you can add a modifier. For example, for utilitarian categories such as hotels for business travel "most frequently stay at when traveling on business" works well to identify a solid and consistent competitive control.

The important point is that respondents get the idea. With "most frequently" as the adverb in the competitive alternative prompt, respondents pick something they use, are okay with and, most important for Linescale purposes, are familiar with and can rate solidly as a benchmark on the Linescale.

On gender quotas, particularly for client customer lists

Larry Linescale urges you to consider an important point regarding managing segments and quotas. Enforcing quotas can upset your customers because many people will be invited to participate but prevented from doing so because certain segment quotas may need to be filled and others are closed.

Exact quotas are far less important than they used to be. Exact quotas used to be important in traditional research methods because of the high variable cost per interview. In Linescale, when you are using your own lists, there is no variable cost to conduct the interview.

For analysis, every table, including gender and other demographics, reports findings by % Acceptors, Borderline, Indifferent and Rejectors. Because of this detailed default analytic view, the total sample does not have to be balanced for every demographic segment. Further, simply putting on a male filter or a female filter gives results for each on every question, regardless of sample size imbalance.

I recommend you don't irritate your customers by excluding them because of quota restrictions. It doesn't hurt to let them take the interview.

On Cascading Quotas – Do not do it

We ourselves do not do cascading quota management, and strongly recommend against clients using this technique. This is a data quality issue. We believe the process of establishing and enforcing cascading quotas distorts sampling unreasonably. We strongly recommend getting a larger sample and “oversampling” on certain segments in order to get sufficient sample in the smaller, or secondary segments.

On asking whether their personal score is About Right, or if they would Raise it or Lower it

Net: we ask respondents, but only to be sure they feel we understand their opinion. We do not change their score.

We generally expect a two or three to one ratio "raise it" versus "lower it", and well over half "No change". My experience over the years has been there is a persistent testing bias that leads respondents to want to be gentle, or polite to tested products. The phenomenon is called, "Yea saying" in the research trade. It is true of all kinds of research. People want to please the interviewer, they want to look intelligent, they want to be on the winning side and they want to "be loved" themselves. Whatever the reasons, because of this bias, no sophisticated researcher takes responses literally, as in "I definitely would buy it." That really just means, "I think I like it a lot." You can even find paradoxical ratings sometimes, where high ambiguity leads to more positive ratings than expected.

The keys to our more precise estimates are that (1) we test versus each respondent's real current competitive alternatives, and (2) we use an algorithm to aggregate a number of important ordinal and metric measures. We have reviewed thousands of interviews over the years, and the pattern of quantitative responses and verbatim comments leads me to believe the algorithm more than the person's statement of how much he likes something. We are comfortable with our "interpretations".

Also, we actually use a wider range for our four categories of Acceptors and Rejectors than the six finer-grained categories we show respondents for their rating.

Maybe there is some insight in a high "raise it", such as, "I really like the general idea and wish this concept well". That is nice, but we are more interested in whether a person is willing to substitute it for what they are currently doing.

On Using Pictures with Controls

Does the Linescale System allow for using pictures to help identify controls? No. The reason is that it is okay to mix apples and oranges (brands versus practices) in the favorites list, but we need to keep the controls’ presentation medium constant. Think of favorite celebrities. Names alone will give a more reliable selection or ranking of favorites than showing pictures of all or some celebrities, since the pictures introduce an executional element that can bias attitudes.

The purpose of the favorites list is to elicit familiar known and favorable benchmarks to anchor scales and score against. Therefore, we have designed our system to accept "flat" words only in the favorites lists.

We encourage creativity of all sorts in the presentation of the concepts, experiences and items to be rated themselves, but not the controls.

On why we include "No other favorite" as a choice for the second competitive favorite?

We give respondents an opportunity to select "No other favorite" for the second competitive favorite choice. This is to not force an irrelevant choice in cases where the respondent chooses to use only one competitive alternative.

We change how this choice is phrased in the later prompt, where the respondent is asked to rate his second favorite. For example, "No other online source" would be changed to a prompt alias which asks the respondent to rate "not using another online source" as a choice for the second competitive favorite.

This works well and respondents have been successfully rating "no other X" across thousands of studies. It consistently gets rated just below the rating of their first favorite. Two choices, whatever they may be, are necessary for the Linescale scoring system. A reason we use this phrase is that if there is no legitimate second choice, respondents select "other" and write in "none", "n.a." "I don't use another source", etc. These work, but are slightly less appropriate to rate on the rating scale than our recommended alternative structure, "not using another online source". But, it is not critical.

On Research Types and Iteration for Development - (Go To the Setup Wizard to see all the test types)

Package Testing: Monadic, in-depth testing is usually best. We add a screening option, where clients can do a quick screening of multiple options and decide which to eliminate, which to focus on, and get some clues as to what the optimal package might be based on the pattern of acceptance/rejection. This should add another arrow to your quiver.

Name Testing: We have a very good system using either the Hi Res Explorer or Lo Res Screener format for testing names - and even logos. This is an inexpensive and fast way to get an Acceptor Score for each name or name/logo combination, and the paired preference. Fundamentally, we benchmark the concept or positioning the name is targeting or representing, then test alternative names for:

- Overall appeal

- How well it fits with the concept

- Perceived ease of pronunciation - which correlates highly with memorability

- Distinctive or unique

- If there are only a few names, we would add an additional question for each name: "How many positive things are called to mind when you think about this name?"

Pricing: We believe the Van Westendorp method, in combination with our Acceptance Segmentation, is the single best out-of-market pricing test. We occasionally follow the VW sequence with a pricing "cascade," where a series of descending specific prices is presented with a buy/not buy option. This is most often done where the competitive price points are well known or there are product go/no-go pricing decisions to be made based on costs, etc.

Communication Testing: We have a very solid ad/video/board evaluation test that measures persuasion versus competition and movement over current brand positioning. This is fully automated. We have another "proposition screening" test where a number of support ideas, messages or even basic selling ideas themselves can be tested on several criteria and an Acceptor Score is generated for each proposition. This test shows rank orders, percent score distribution in each scoring "box", percent wins over competition, metric Linescale graph ratings and mean scores for each proposition.

Opportunity Exploration: Any Linescale test can be used to test out future ideas on a Rating Set versus a control, but Concept Tests and Market Assessments are the best venues for opportunity exploration. The Driver Analysis in any test is helpful, but particularly in Market Assessment where the category as a whole reveals its strengths and weaknesses. You can even "test your bets" within the same test by analytically running individual brands to observe their patterns of strength and weakness. Adding a "Points of Pain" sequence is another good exploratory source, and this can be added to any Concept Test or Market Assessment. A general feature of all Linescale tests is the verbatim comments generated by respondents who are asked why they rated as they did. This tends to be a positive "lever" that causes people to dig down into rationalizing their preferences and rejections, and it tends to yield verbatims reflecting what is salient as well as discriminant for an individual.

We think of every Linescale test as a step on the road to identifying and maximizing opportunities. This is why we stress "Iteration": learning, creating a new hypothesis and testing the new hypothesis. (This is shorthand for what academics call "The Scientific Method.") The hypothesis, of course, can be a market opportunity in the form of a new concept, or it can be a product feature or enhancement or a message.

On Customer Satisfaction/Dissatisfaction Tests for a brand, product or enterprise.

We establish the favorite alternatives to the brand in question. These brands are then rated on the Linescale. Original expectations for the brand or current general impression of the brand are rated on the same Linescale, thus generating paired relationships between each item on the Linescale. Recent experience with the brand is then rated. This gives comparison of recent experience with the brand versus original expectations and versus each of the alternative competitors.

The test identifies current satisfaction levels based on recent experiences and includes assessment of the brand on key variables and likelihood of recommending the tested brand, product or enterprise to others.

Four Preference Segments are established, with the percentage of customers in each segment and the driving reasons for preference or dissatisfaction measured qualitatively and quantitatively. Specific action recommendations are made on what needs to be changed to reduce dissatisfaction and customer loss. Brand strengths are identified, and sources and reasons for dissatisfaction are explored in depth.

On The Importance of Precisely Understanding Dissatisfaction

The immediate revenue benefit for the business is plugging the leaky bucket. An 8% annual decrease in dissatisfied customers walking out the door translates to a 30% increase in volume over three years with no marketing expenditures. It is much cheaper to satisfy current customers than to generate new customers. Fixing sources of dissatisfaction also increases the likelihood of converting new customers into loyal customers.

Linescale measures Satisfaction as well as Dissatisfaction, but we do it in an actionable way. Specific action steps are indicated to move the slightly dissatisfied to fuller brand participation, and to understand what needs to be done to prevent or alleviate extreme dissatisfaction among your customer base. Linescale focuses on specific action steps, not just a report card.

Linescale also has complete systems which cover all aspects of a business in an integrated "Management Dashboard" way. For the e-tailer, for example, Linescale assesses not only your Web site but also your print catalogs, advertising, product mix, display techniques, specific product usage satisfaction, reasons for non-use by non-users, etc. All are measurable by the automated, powerful yet inexpensive Linescale Expert Systems.

On Deep Dive Customer Satisfaction/Dissatisfaction Tests

A Customer Satisfaction study takes an "across the breadth of the business" look and identifies previously unsuspected problem areas. We take the learning and issues uncovered in the basic Customer Satisfaction study, structure another Customer Satisfaction interview focused on that area and do a deep "dive" into customer experience (as opposed to an initial broad cruise across the surface). This is an effective depth probe into understanding a sub-market.

How do you do a Deep Dive? First we do an exploratory to further clarify and identify issues within the area, then we go for quantification of the issues and identifying sub-segments in follow-up iterations. This is all followed by coming up with "fixes" we then test in our development suite.

On Linescale Concept Tests

In early stages of product development and concept screening, we recommend first doing a Market Assessment. (See Market Assessment below.) A Market Assessment is a discovery experience which gives a snapshot of the marketplace, brands and product shares, reasons why the favorite brand is the favorite brand - and weaknesses and shortcomings of the favorite brand. Consumers' verbatim reasons for rating their favorite as they do provide insight into the salient issues in determining brand choice, and into the consumer language describing those issues.

This information is very helpful in ideation and creation of meaningful concepts.

Screeners sort out a large number of alternatives

Our Low-Resolution Screeners score up to ten or twelve concepts of one or two sentences in a similar space. They are relatively rated and ranked versus each other on an overall Excellent/Terrible scale plus three other scales. Minimal information is gathered on why the poorer ideas are rejected. The Low-Resolution Screener is typically used to screen many early ideas.

Select the best from the good candidates

Our High-Resolution Screeners give more depth of learning on a smaller number of candidates, and gather preliminary development information for the winners. (1) Three to five fully described concepts are evaluated with scoring approximating full Linescale scoring. (2) Diagnostic information including verbatims is gathered for each idea. (3) The screener is used as a cost-efficient method of homing in on a final concept to take into full development.

Assess and Develop a single concept (Monadic Concept Test)

The Monadic Concept Test, in my judgment, is the single most powerful tool for effective product development. It is used for both concept go/no-go decisions as well as for iterative development and improvement.

- One concept at a time is tested for maximum evaluation precision and full diagnostic feedback.

- The Monadic Concept Test is also used to test multiple names for a concept, and to test multiple package variations.

- Using Linescales, multiple product components and combinations of components such as ingredients, flavors, service standards and conditions can be described and tested in text or graphics form, similar to conjoint testing with the advantage of each element directly compared with all other elements.

- Price testing via Van Westendorp. This estimates the price point at which Concept or Product ACCEPTORS (those likely to be in the market for this product) find the product begins to be too expensive for consideration.

- Likelihood of trial, probability of repeat and depth of repeat questions can be added as well as use-occasion estimates.

- The key to development success is ITERATE, ITERATE, ITERATE. Even Shakespeare wrote multiple drafts.

On Linescale Advertising and Communication Testing

Ad Test

(1) Optimizing and evaluating a rough or finished communication, whether print ad, storyboard, video, commercial or infomercial. (2) Respondents rate current impression of brand. (3) Communication is viewed and rated. (4) Full diagnostics, key points communicated and specific attributes are measured. (5) Quantitative and verbatim.

Proposition testing

(1) Up to ten or twelve selling points are relatively rated and ranked on an Excellent/Terrible scale. (2) Each respondent's most preferred proposition is then presented as the key communication idea, inserted into the concept and fully scored, including full diagnostics and verbatims. (3) Used to screen alternative selling points or propositions. (Identical format to Screening of multiple concepts.)

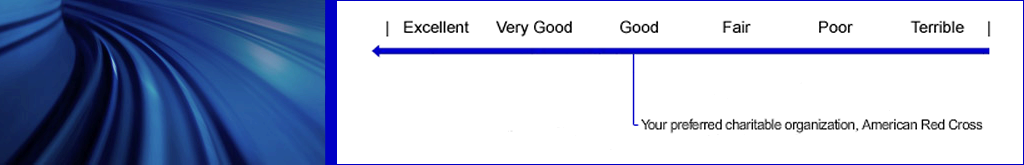

On Customer Satisfaction/Dissatisfaction - or, Listening to Your Customer

Website Assessment

(1) Visitors to either home page, landing page or daughter website rate expectation for the site today. (2) Interview is paused and suspended while visit is completed. (3) At conclusion of visit, interview is resumed. (4) Measures are taken of actual experience with the website. (5) Full diagnostic evaluation including verbatims and reasons for rating. (6) Specific activities or information either intended or completed are identified. (7) All activities either intended or completed are evaluated. (8) Transactions or other revenue producing functions are assessed and evaluated and likely alternative intentions assessed. (9) Future activities or information desired can be assessed as an add-on.

Customer Satisfaction with Enterprise/Brand

(1) Current and/or past customers are invited either by email or web intercept. (2) Original expectations are measured. (3) Current or recent experience with brand is identified and measured, including (4) full diagnostics and verbatim reasons for evaluation. (5) Departments, functions, products or features used are identified and evaluated. (6) Specific characteristics or attributes of relevant departments, products, services are measured.

On Market Assessment

What is a Market Assessment and when is it used?

A Market Assessment is a test which discovers brand or product usage in a market, the benefits of each brand and strengths, weaknesses, threats and opportunities in the market space.

Here's how it works:

Consumers select three favorites from a broad list of brands and practices in the market. They select one at a time - most recently used, next favorite, third alternative. Next, consumers rate their 2nd and 3rd choices as benchmarks. The brand selected as most recent choice is then presented in two parts. Consumers are asked to rate their ORIGINAL EXPECTATIONS for their current choice, and then rate their CURRENT EXPERIENCE with it.

The current or most recent - or test item - is then rated on three important brand variables (defaults might be Uniqueness, Value for the money, Importance to them) and Recommendation. This produces an ACCEPTOR score for the First favorite.

We report an aggregated score, no matter what was in first place. However, by running filters for each specific item selected as the favorite, you get the Acceptor score for that item. Any item that is picked first from the list will have an ACCEPTOR score generated for it. The default report shows an AGGREGATED Acceptor score, not the score for any one individual item. We do get an Acceptor score for EACH item that was picked as a "favorite," and with a large enough sample, each of the larger brands will have enough consumers who select it as favorite to yield an ACCEPTOR Score for that brand.

We typically suggest a sample size of at least 1,000, so an item with 10% selecting it as favorite will have an N=100. You will find out what the important - and lacking - variables in the available choices in the market are from the Driver Analysis. This can be very good for mapping.
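The sample-size guideline above is simple proportional arithmetic. As a rough sketch (the function names are illustrative and not part of the Linescale system), the expected base for an item, and the total sample needed to reach a target base, can be estimated like this:

```python
import math

def expected_base(total_sample: int, favorite_share_pct: float) -> float:
    """Expected number of respondents who pick a given item as favorite."""
    return total_sample * favorite_share_pct / 100

def required_total_sample(min_base: int, favorite_share_pct: float) -> int:
    """Smallest total sample expected to yield at least `min_base` respondents
    for an item chosen as favorite by `favorite_share_pct` percent of people."""
    if not 0 < favorite_share_pct <= 100:
        raise ValueError("favorite_share_pct must be between 0 and 100")
    return math.ceil(min_base * 100 / favorite_share_pct)

print(expected_base(1000, 10))          # 100.0
print(required_total_sample(100, 10))   # 1000
print(required_total_sample(100, 5))    # 2000
```

These are expected values only. Actual bases will vary from sample to sample, so smaller brands may need some headroom.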

Consumers are asked an open-ended question: "Why did you rate the concept as you did?" This verbatim response is reported separately in descending order of score for the favorite.

Consumers are also asked to select POSITIVE/NEGATIVE reasons for their ratings. A quantitative DRIVER ANALYSIS chart is produced for the Aggregated Favorite.

With filtering by Favorite, a Driver Analysis is obtained for each of the larger brands individually.

With a sufficiently large sample size (enough to yield at least 100-125 users for each brand), you would have a complete filtered report for each first favorite, including what preferences by users of each brand are for any concepts or ideas that might be included for screening later in the test.
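To illustrate the idea of an aggregated score plus filtered scores by favorite, here is a minimal sketch. It assumes each respondent record carries the first favorite and a Linescore, and it uses the "Linescore above 600" definition of an Acceptor given earlier in this FAQ. The function name, data layout and minimum base of 100 are illustrative assumptions, not the Linescale reporting code.

```python
from collections import defaultdict

ACCEPTOR_CUTOFF = 600   # a Linescore above 600 counts as an Acceptor
MIN_BASE = 100          # lower end of the 100-125 users suggested per brand

def acceptor_report(respondents, min_base=MIN_BASE):
    """respondents: iterable of (first_favorite, linescore) tuples.
    Returns (aggregated_pct, {brand: pct}); brands below min_base are omitted."""
    by_brand = defaultdict(list)
    for favorite, linescore in respondents:
        by_brand[favorite].append(linescore > ACCEPTOR_CUTOFF)
    all_flags = [flag for flags in by_brand.values() for flag in flags]
    if not all_flags:
        raise ValueError("no respondents supplied")
    aggregated = 100.0 * sum(all_flags) / len(all_flags)
    per_brand = {
        brand: 100.0 * sum(flags) / len(flags)
        for brand, flags in by_brand.items()
        if len(flags) >= min_base   # too few users: no filtered report
    }
    return aggregated, per_brand

# Made-up data: 120 users of Brand A, 30 users of Brand B
data = [("Brand A", 700)] * 60 + [("Brand A", 500)] * 60 + [("Brand B", 650)] * 30
print(acceptor_report(data))   # (60.0, {'Brand A': 50.0})
```

In this made-up example Brand B still contributes to the aggregated score, but it has too few users to support its own filtered report.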

Market Assessments often include a probe of unmet needs or unsolved problems.

Respondents are given a list of potential problems and asked to select their top five and then asked why they picked the top five as they did. (This is referred to as “points of pain” within a market space.) Also, respondents can be asked to tell us what their biggest problem is in the market space and how they would want it solved in an open end question.
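A "points of pain" tally can be sketched as a simple count of how many respondents include each problem among their top picks. The function name and data layout below are assumptions for illustration only:

```python
from collections import Counter

def points_of_pain(selections, max_picks=5):
    """selections: one list of chosen problems per respondent.
    Returns (problem, percent of respondents choosing it), most chosen first."""
    counts = Counter()
    for chosen in selections:
        # dict.fromkeys drops repeats while keeping the respondent's order
        counts.update(list(dict.fromkeys(chosen))[:max_picks])
    total = len(selections)
    return [(problem, 100.0 * n / total) for problem, n in counts.most_common()]

# Made-up responses from four respondents
responses = [
    ["Too expensive", "Hard to find", "Confusing labels"],
    ["Too expensive", "Confusing labels"],
    ["Too expensive"],
    ["Hard to find", "Too expensive"],
]
for problem, pct in points_of_pain(responses):
    print(f"{problem}: {pct:.0f}%")
```

The open-ended "why" responses would be reported alongside this tally as verbatims.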

On Market Assessment Sample Size

Market Assessment, particularly with Linescale Favorite Focus, is new to many clients, so I think it best to clarify a few points.

On Linescale Product Tests

Product Tests are only offered to clients equipped to manage the logistics of the process

Product Test with Preliminary Concept

(1) A concept and a product, either a physical product, or access to a service or application are concurrently delivered to target group respondents. (2) Respondents first rate the concept, (3) then all respondents try the product and rate the product compared to competitive frame and personal expectations based on the concept. (4) Full diagnostic information on the product is gathered, (5) including all product features and (6) areas for improvement of the product. (7) Van Westendorp pricing will establish expected and maximum price range for the product. (8) Trial, repeat and usage occasion information is gathered.

Acceptor Product Test

(1) Identical to a Product Concept Test except that (2) a full concept test is done as an initial step. (3) Respondents who are Acceptors (highly likely to try the product) are invited to try the product in a follow-up interview. (4) Product is then delivered to Acceptors only. (5) Used where physical distribution is difficult or product quantities are limited.

On the four different measurements generated and reported using a Linescale - and how to use them

Linescales are reported four different ways, all using the same raw data. Linescale reports Paired Comparisons, Average Paired Comparisons, "Box Scores" and Mean (metric average) Ratings on a six-point scale basis. Explanation:

1. Paired comparisons: Linescale is unique in that it measures pairs as well as metrics. Because all items are placed on the same scale, the placement of the second item establishes a paired comparison relationship to the first item. Placement of the third item establishes a paired comparison relationship with item one and with item two, etc. This is a very granular and reliable measurement and we use it in our scoring algorithm as well as showing it for all rating sets. This is our preferred measurement.

2. Average paired comparison: Since we generate a paired comparison "matrix" which shows the paired relationship of each item on the linescale to each other item, we can generate an average paired comparison score which is simply the arithmetic average of all possible pairs for each item. This is a very stable measure.

3. "Box percentage": Linescale also reports the percentage of clicks "under" each scale guide, as if it were a physical box checked. This, in effect, treats the linescale as if it were a radio button with a discrete measure. This is done for single linescales that are not part of a rating set. This is reported as percentage under each scale guide.

4. Metric mean scores: Means are calculated as if the scale were a six-point scale, without regard to the number of scale guides above the line. Means are not our preferred measurement, since, as in all metric scale ratings, extreme ratings, particularly extreme negative ratings, drag down the mean score. Typically people use statistics to ameliorate the effect of the outliers. Linescale has better measurements (see 1., 2. and 3.) available. We use the mean scores to create the linescale graphic which shows an aggregate linescale rating for whatever filter or segment is being analyzed.
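As an illustration of measurements 1 and 2, the sketch below builds a paired comparison matrix from each respondent's placements on the shared scale and then averages each item's pairs. The function names and data layout are assumptions, and treating ties as a win for neither item is also an assumption. The production Linescale calculation may differ.

```python
from itertools import permutations
from statistics import mean

def paired_matrix(ratings):
    """ratings: one dict per respondent, {item: position on the shared scale}.
    Returns {(a, b): percent of respondents who placed a above b}."""
    items = sorted(ratings[0])
    matrix = {}
    for a, b in permutations(items, 2):
        wins = sum(1 for r in ratings if r[a] > r[b])  # ties count for neither
        matrix[(a, b)] = 100.0 * wins / len(ratings)
    return matrix

def average_paired(matrix):
    """Arithmetic average of each item's paired scores against all other items."""
    items = sorted({a for a, _ in matrix})
    return {a: mean(matrix[(a, b)] for b in items if b != a) for a in items}

# Made-up placements from four respondents
ratings = [
    {"Test": 5.2, "Favorite": 4.8, "Next": 3.1},
    {"Test": 4.0, "Favorite": 5.5, "Next": 3.9},
    {"Test": 5.9, "Favorite": 5.0, "Next": 5.4},
    {"Test": 2.2, "Favorite": 4.1, "Next": 3.0},
]
matrix = paired_matrix(ratings)
print(matrix[("Test", "Favorite")])   # 50.0
print(average_paired(matrix))         # {'Favorite': 62.5, 'Next': 25.0, 'Test': 62.5}
```

Because every item sits on the same line, no extra questions are needed to fill the matrix. Each pair falls out of the placements already collected.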

Technical discussion: Since linescale is a finely gradated measurement, each of the six "points" is actually a large number of points. The center of each scale guide is the "center box value." For example, the center of the third box is 3.0, and as you get to the top of the third guide, the value increases to 3.49. The bottom of the third guide "box" is 2.50. Similarly, the center of the 6th segment of the line is scored a "6," and if the very top (highest position on the sixth segment) is clicked, the value is 6.49 from a metric perspective. The highest possible rating of 6.49 could only be achieved if every respondent clicked at the very highest pixel under the "top box," at the extreme left of the linescale.
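The box arithmetic above can be sketched as follows. This assumes a linear mapping from click position to metric value, with the low end of the first box at 0.50 (implied by the 2.50 to 3.49 range given for the third box). On-screen orientation is not modeled, and the function names are illustrative only.

```python
import math
from collections import Counter
from statistics import mean

LOW, HIGH = 0.50, 6.49   # assumed metric range for six scale guides

def metric_value(position):
    """position: 0.0 at the lowest end of the line, 1.0 at the highest end."""
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be between 0.0 and 1.0")
    return round(LOW + position * (HIGH - LOW), 2)

def box_of(value):
    """Scale guide ("box") a metric value falls under, e.g. 2.50-3.49 -> 3."""
    return min(6, max(1, math.floor(value + 0.5)))

def box_percentages(values):
    """Percent of clicks under each of the six scale guides."""
    counts = Counter(box_of(v) for v in values)
    return {box: 100.0 * counts[box] / len(values) for box in range(1, 7)}

clicks = [2.50, 3.0, 3.49, 6.49, 0.5]   # made-up metric values
print([box_of(v) for v in clicks])      # [3, 3, 3, 6, 1]
print(box_percentages(clicks)[3])       # 60.0
print(round(mean(clicks), 2))           # metric mean on the six-point basis
```

Note how the single low click pulls the mean down while leaving the box percentages for the other guides untouched, which is the weakness of means described in item 4.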

On the three different Linescale Scoring Types and generating Preference Segments

Linescale tests are unique in that each test automatically generates a Preference Segmentation which categorizes each respondent as one of four segments: Acceptor, Borderline, Indifferent and Rejector. There are three scoring algorithms Linescale engages to do this. We call the algorithms Types 1, 2 and 3.

- Type 1, 2 and 3 Scoring.

- Type One. A single stimulus does the scoring. It is scored versus Favorite, Second Favorite and “Pre-rating,” if the pre-rating is current impression of the brand, original expectation for the brand or expectation for the website. Type One scoring is typically used for Ad Testing, Customer Satisfaction/Dissatisfaction, Market Assessment and Website Assessment.

Seven measures are used in Type 1 scoring:

- Experience versus Pre-Impression

- Experience versus Favorite Competitor

- Experience versus Next Favorite Competitor

- 4 Scoring Variables, including Recommendation

- Type Two. The stimulus is broken into two parts. Part A is called “Concept,” part B is called “Experience.” The Concept can be the Headline and summary benefits of a concept in a concept test, and the Experience a restatement of the idea with more information about it. The additional information can include a more complete listing of benefits and sub-benefits, support, pricing or other features. In a product test, the Concept is a description of the product they are about to experience, and the Experience is either the physical product or service.

Seven measures are used in Type 2 scoring:

- Concept versus Favorite Competitor

- Concept versus Next Favorite Competitor

- Experience versus Concept

- 4 Scoring Variables, including Recommendation

- NOTE: Type 1 and Type 2 scoring gather the data in exactly the same way. Consequently, a Type 1 test can be recalculated to view results with Type 2 scoring and vice versa. The difference is simply whether the "pre" measure is itself scored versus competition (Type 2) or only serves as the benchmark the Experience is scored against (Type 1). Scoring it versus competition "values" the pre-measure of the brand. This alternate view can be useful in certain Customer Satisfaction tests. The "Change Report" dropdown menu on the online full report allows a toggle of Types 1 and 2.

- Type Three. Type 3 scoring gathers data differently from Types 1 and 2. Type 3 is used to generate Preference Segmentation for multiple concepts tested in the same test. Because of possible respondent fatigue and other reasons, the measures are limited, and the results have more "volatility" and less granularity than a pure monadic (single) concept or product test.

Five measures rather than seven are used in a Type 3 test:

- Concept versus Favorite

- Concept versus Next Favorite

- 3 Scoring Variables, including Recommendation

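The measure lists for the three scoring types can be laid out as a small data structure. The sketch below only enumerates the comparisons and shows that one respondent record supports both Type 1 and Type 2 views. It does not reproduce how Linescale combines the measures into a score, and all names are illustrative assumptions.

```python
# Comparison measures per scoring type, as listed in the text.
# "pre" is the pre-rating in Type 1 and the Concept in Type 2.
COMPARISONS = {
    1: [("experience", "pre"), ("experience", "favorite"), ("experience", "next_favorite")],
    2: [("pre", "favorite"), ("pre", "next_favorite"), ("experience", "pre")],
    3: [("concept", "favorite"), ("concept", "next_favorite")],
}
SCORING_VARIABLES = {1: 4, 2: 4, 3: 3}   # each count includes Recommendation

def measure_count(scoring_type):
    """Total measures used: paired comparisons plus scoring variables."""
    return len(COMPARISONS[scoring_type]) + SCORING_VARIABLES[scoring_type]

def comparison_outcomes(record, scoring_type):
    """record: one respondent's placements on the shared scale, keyed by role.
    Returns whether the first item of each pair was placed above the second."""
    return {f"{a} > {b}": record[a] > record[b] for a, b in COMPARISONS[scoring_type]}

# Made-up placements for one respondent
record = {"pre": 4.2, "experience": 5.1, "favorite": 4.8, "next_favorite": 3.9}
print([measure_count(t) for t in (1, 2, 3)])   # [7, 7, 5]
print(comparison_outcomes(record, 1))
print(comparison_outcomes(record, 2))
```

In this example the Experience beats everything under Type 1, while under Type 2 the pre-measure loses to the Favorite, which is the kind of difference the Type 1 and Type 2 toggle is meant to expose.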
A Word on Segmentation by Preference Segmentation

Segmentation by Preference Segment

Linescale focus is on segmentation by Preference Segment. This is the reverse of how market segmentation is usually done. Usually, the analyst looks at answers to attitude questions, demographics, preference for various attributes, etc., and tries to cluster those into clumps of attributes or reasons for rating that "hang together," that is, are correlated in some fashion. There is art in doing this as there is no standard for "how many" clusters there should be. Typically an analyst will look at a "four segment solution," a five segment, a six, an eight or even a twelve segment solution to "judge" if the constellation makes sense. It is an "art form." And, at the end, if he gets clusters with correlation strengths of .6 or .7 he feels good. Typically, clusters have even lower correlation scores. And he then has the task of relating those clusters to degree of preference in the market, or association with the "dependent variable," which is usually brand preference or favorite.

We reverse the process. We begin with a very stable preference "dependent variable" we call Acceptor Percentage. A person who has jumped the hurdles to be a brand Acceptor is, in fact, very favorably disposed towards that brand. And by implication, he is a "buyer" of those attributes that a brand possesses. That is his segment. So, we kill several birds with one shot: we establish the size of market for each brand, the reasons for the brand's strengths and weaknesses, and the demographic and other characteristics of the individuals preferring and Accepting that brand - all of which make up its profile. And, we establish the size of the market interested in each profile, or the segmentation of the market. This provides a very stable segmentation base.

A few last words on segmentation. There are two important issues often overlooked in segmentation.

People develop preferences based on availability

The first is distribution effect. People develop preferences based on availability of solutions. They get familiar with a solution and grow to favor it. In other words, environmental conditioning competes strongly with heredity in things like preference for spicy versus bland, chocolate versus vanilla, price versus prestige, etc. And brands have often developed and are distributed in non-orderly ways.

People have multiple "favorites"

The second is related. People do not have only one favorite or acceptable brand. For example, a loyal user in the coffee business is one who gives 55% of her purchases to brand A, and usually distributes the rest among two or even three other brands, depending on which has better shelving or display or feature pricing today. This is generally true in most markets: A person may usually prefer "healthy," but she also likes "greasy and savory." There is in fact a "distribution" of preferences WITHIN each person. This is very hard to capture with traditional segmentation, but immediately apparent when you look at patterns of brand preference. Again allowing windage for distribution effects, brand preferences and profiles measured by Acceptor Score are the most discriminant and reliable way to determine the real segmentation in a market.

Clever use of the Linescale Market Assessment Study can expose what the real segmentation in a market is - and suggest what to do about it.